On YouTube Audio Extraction

One of the first big integrations I settled on while developing isobot was YouTube. At the time, YouTube music bots were pervasive in almost every community and server. It seemed like just about every bot had to have the ability to play a song, or, for some of the more complex ones, an entire song queuing system!

I initially tried looking around at other open-source bots to see how they were implementing such a feature. There seemed to be roughly 3 approaches:

- Download the video with youtube-dl and extract the audio with ffmpeg

- Use the YouTube Developer API

- Use Lavaplayer

Each of these approaches came with its own problems, some more than others.

youtube-dl and ffmpeg⌗

Isobot’s server is written in Go, and I’m already a bit apprehensive about using a wrapper for ffmpeg and youtube-dl. You’re stuck between a rock and a hard place: either accept cgo into your life, or deal with piping stdout from a spawned process and handling its errors.

While neither of those choices is in and of itself a deal-breaker, how YouTube allows you to download videos in the first place was.

When downloading a YouTube video, you have to start by picking a format. Running youtube-dl with the -F argument can give you an idea of what formats are available for a given video:

```
❯ youtube-dl -F https://www.youtube.com/watch\?v\=dQw4w9WgXcQ
[youtube] dQw4w9WgXcQ: Downloading webpage
[youtube] dQw4w9WgXcQ: Downloading player d2cc1285
[info] Available formats for dQw4w9WgXcQ:
format code  extension  resolution note
249          webm       audio only tiny   46k , webm_dash container, opus @ 46k (48000Hz), 1.18MiB
250          webm       audio only tiny   61k , webm_dash container, opus @ 61k (48000Hz), 1.55MiB
140          m4a        audio only tiny  129k , m4a_dash container, mp4a.40.2@129k (44100Hz), 3.27MiB
251          webm       audio only tiny  129k , webm_dash container, opus @129k (48000Hz), 3.28MiB
394          mp4        256x144    144p   67k , mp4_dash container, av01.0.00M.08@ 67k, 25fps, video only, 1.71MiB
160          mp4        256x144    144p   71k , mp4_dash container, avc1.4d400c@ 71k, 25fps, video only, 1.81MiB
278          webm       256x144    144p   89k , webm_dash container, vp9@ 89k, 25fps, video only, 2.27MiB
133          mp4        426x240    240p  117k , mp4_dash container, avc1.4d4015@ 117k, 25fps, video only, 2.96MiB
395          mp4        426x240    240p  133k , mp4_dash container, av01.0.00M.08@ 133k, 25fps, video only, 3.37MiB
242          webm       426x240    240p  159k , webm_dash container, vp9@ 159k, 25fps, video only, 4.02MiB
134          mp4        640x360    360p  219k , mp4_dash container, avc1.4d401e@ 219k, 25fps, video only, 5.55MiB
396          mp4        640x360    360p  259k , mp4_dash container, av01.0.01M.08@ 259k, 25fps, video only, 6.57MiB
243          webm       640x360    360p  273k , webm_dash container, vp9@ 273k, 25fps, video only, 6.91MiB
135          mp4        854x480    480p  337k , mp4_dash container, avc1.4d401e@ 337k, 25fps, video only, 8.52MiB
244          webm       854x480    480p  397k , webm_dash container, vp9@ 397k, 25fps, video only, 10.05MiB
397          mp4        854x480    480p  448k , mp4_dash container, av01.0.04M.08@ 448k, 25fps, video only, 11.33MiB
136          mp4        1280x720   720p  655k , mp4_dash container, avc1.4d401f@ 655k, 25fps, video only, 16.57MiB
247          webm       1280x720   720p  699k , webm_dash container, vp9@ 699k, 25fps, video only, 17.69MiB
398          mp4        1280x720   720p  889k , mp4_dash container, av01.0.05M.08@ 889k, 25fps, video only, 22.48MiB
399          mp4        1920x1080  1080p 1649k , mp4_dash container, av01.0.08M.08@1649k, 25fps, video only, 41.69MiB
137          mp4        1920x1080  1080p 3546k , mp4_dash container, avc1.640028@3546k, 25fps, video only, 89.65MiB
18           mp4        640x360    360p  594k , avc1.42001E, 25fps, mp4a.40.2 (48000Hz), 15.04MiB (best)
```

Each of these formats has a corresponding googlevideo.com/videoplayback URL that provides raw access to that format’s file content. Sadly, the API has no way to seek by timestamp. It does, however, support range requests. If you’ve ever looked at what YouTube itself is doing while a video is loading, you’d notice many requests in the Networking Tab fetching data from googlevideo.com/videoplayback with an incrementing range query parameter.

On the surface, this seems like a non-issue in the context of youtube-dl. Its documentation shows examples of how to splice out a subsection of a video based on timestamps. But this really just downloads the full video and then passes it to ffmpeg to slice up. While that might be acceptable for smaller bots with fairly constrained use cases and rate limits that accommodate the extra overhead, isobot’s parallelism and performance requirements can’t afford this cost.

YouTube Developer API⌗

Using the YouTube Developer API removes the need for youtube-dl. This saves you from extracting URLs from raw HTML and JavaScript, but you still need ffmpeg to splice up the video after downloading it.

However, this is inherently risky. YouTube’s developer policy states:

> Don’t use YouTube’s API to:
>
> […]
>
> Offer users the ability to download or separate audio tracks or allow users to modify the audio or video portions of a video.

A few months ago, some high-profile music bots were issued Cease & Desist orders by YouTube because they “modified the service and used it for commercial purposes.”

It’s not entirely clear whether the music bots in question were using the developer API, but it is highly likely. Notably exempt from the string of Cease & Desists were music bots that did not interact with the developer API, most of which are very popular and still running today without incident.

Lavaplayer⌗

Lavaplayer is an open-source alternative that solves most of these problems. It’s written in Java and is capable of streaming the audio instead of downloading the video fully.

- The overhead is low (350kb per song)

- It can start at a timestamp without needing to download the whole file

- It doesn’t depend on ffmpeg or youtube-dl

- It doesn’t use the (potentially slow) disk

However, there’s a problem: it’s written in Java, and interop between Go and Java is basically non-existent outside of RPC/HTTP. Projects like Lavalink give Lavaplayer an HTTP API, but the combined weight of these two massive projects would likely make debugging or profiling performance issues a nightmare, not to mention the tech debt incurred by bringing both into the core runtime of isobot.

With this in mind, I started writing a simple implementation in Go to see how easy it would be to build such a feature without Lavaplayer. It needed to be similarly fast and highly parallel, with low overhead and no reliance on cgo or spawned processes.

A foray into Matroska⌗

The first task was figuring out how to get the formats available for a given YouTube video.

This could be as simple as parsing a JSON response from youtube.com/youtubei/v1/player or, if the video is age-restricted, as complicated as downloading the webpage’s HTML and using a set of ever-updating regexes to extract a similar JSON payload. Needless to say, that seems like a beast to maintain. Luckily, there’s a fantastic library already written in Go that does exactly this.

With a way to fetch formats, it’s time to pick one.

Discord’s Bot API documentation specifies that:

> Voice data sent to discord should be encoded with Opus, using two channels (stereo) and a sample rate of 48kHz.

Conveniently, YouTube videos include a 48kHz Opus-encoded, audio-only format packaged in a webm container, a subset of the Matroska file format.
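To make that choice concrete, here is a minimal sketch that decodes a trimmed-down player response and picks the 48kHz audio-only Opus format. The JSON field names (`streamingData`, `adaptiveFormats`, `itag`, `mimeType`, `bitrate`, `audioSampleRate`) are assumptions based on what the undocumented `youtubei/v1/player` endpoint is commonly observed to return, the sample values are made up, and `Format` and `pickOpus` are hypothetical names. In practice, the Go library mentioned above handles this parsing.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// Format mirrors a subset of one entry in streamingData.adaptiveFormats.
// ASSUMPTION: field names are based on observed player responses, not a documented API.
type Format struct {
	Itag            int    `json:"itag"`
	MimeType        string `json:"mimeType"`
	Bitrate         int    `json:"bitrate"`
	AudioSampleRate string `json:"audioSampleRate"` // sent as a string, e.g. "48000"
	URL             string `json:"url"`
}

type playerResponse struct {
	StreamingData struct {
		AdaptiveFormats []Format `json:"adaptiveFormats"`
	} `json:"streamingData"`
}

// pickOpus returns the highest-bitrate audio-only webm/Opus format sampled
// at 48kHz, which matches what Discord expects without re-encoding.
func pickOpus(formats []Format) (Format, error) {
	var best Format
	found := false
	for _, f := range formats {
		if !strings.HasPrefix(f.MimeType, "audio/webm") || !strings.Contains(f.MimeType, "opus") {
			continue
		}
		if f.AudioSampleRate != "48000" {
			continue
		}
		if !found || f.Bitrate > best.Bitrate {
			best, found = f, true
		}
	}
	if !found {
		return Format{}, errors.New("no 48kHz Opus audio-only format")
	}
	return best, nil
}

func main() {
	// Made-up sample response with three audio formats.
	raw := `{"streamingData":{"adaptiveFormats":[
		{"itag":140,"mimeType":"audio/mp4; codecs=\"mp4a.40.2\"","bitrate":130000,"audioSampleRate":"44100"},
		{"itag":249,"mimeType":"audio/webm; codecs=\"opus\"","bitrate":50000,"audioSampleRate":"48000"},
		{"itag":251,"mimeType":"audio/webm; codecs=\"opus\"","bitrate":135000,"audioSampleRate":"48000"}]}}`
	var resp playerResponse
	if err := json.Unmarshal([]byte(raw), &resp); err != nil {
		panic(err)
	}
	f, err := pickOpus(resp.StreamingData.AdaptiveFormats)
	if err != nil {
		panic(err)
	}
	fmt.Println(f.Itag) // 251
}
```

Preferring the highest bitrate among the Opus formats is a choice made for this sketch; a bot that cares more about bandwidth could just as easily pick the lowest.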

Using this format directly saves a lot of re-encoding work, but there is still a problem. Even though the format contains only 48kHz Opus frames, the whole file can still reach gigabytes if the video is very long.

As an example, here is the audio-only format for a 24-hour nature sounds video:

```
[...] audio only tiny 132k , webm_dash container, opus @132k (48000Hz), 1.33GiB
```

A 1.33GiB file is much too large to ever dream of holding in memory for the duration of the audio, let alone just to take a subsection from it. And since the googlevideo.com/videoplayback API doesn’t support timestamp requests, timestamps have to be translated into byte-range requests.

Luckily, webm headers contain precisely the information needed for that translation. Using MKVToolNix, we can take a look at a file’s structure:

```
+ EBML head
|+ EBML version: 1
|+ EBML read version: 1
|+ Maximum EBML ID length: 4
|+ Maximum EBML size length: 8
|+ Document type: webm
|+ Document type version: 4
|+ Document type read version: 2
+ Segment: size 3828201
|+ Seek head (subentries will be skipped)
|+ Segment information
| + Timestamp scale: 1000000
| + Duration: 00:03:53.281000000
| + Multiplexing application: google/video-file
| + Writing application: google/video-file
|+ Tracks
| + Track
|  + Track number: 1 (track ID for mkvmerge & mkvextract: 0)
|  + Track UID: 69537484208299452
|  + Track type: audio
|  + "Lacing" flag: 0
|  + Codec ID: A_OPUS
|  + Codec's private data: size 19
|  + Codec-inherent delay: 00:00:00.006500000
|  + Seek pre-roll: 00:00:00.080000000
|  + Audio track
|   + Sampling frequency: 48000
|   + Channels: 2
|   + Bit depth: 16
|+ Cues (subentries will be skipped)
|+ Cluster (subentries will be skipped)
```

We can see two main sections here: EBML head and Segment. The part we’re interested in is Segment, particularly two of its subsections: Cues and Cluster.
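Every element in that structure, from EBML head down to each Cue Point, is stored as an EBML element: a variable-length ID, a variable-length data size, then the data itself. The width of each variable-length integer (VINT) is given by the number of leading zero bits in its first byte, as described in RFC 8794. The sketch below decodes an element header from a byte slice; `readVint` and `readElementHeader` are illustrative names, not go-mkvparse’s API, and the sample header is constructed by hand.

```go
package main

import (
	"errors"
	"fmt"
	"math/bits"
)

var errShort = errors.New("ebml: not enough data")

// readVint decodes an EBML variable-length integer at the start of b.
// Leading zero bits in the first byte, plus one, give the total width.
// When keepMarker is true the length-marker bit is kept, which is how
// element IDs are conventionally written (e.g. Segment = 0x18538067).
// It returns the value and the number of bytes consumed.
func readVint(b []byte, keepMarker bool) (uint64, int, error) {
	if len(b) == 0 {
		return 0, 0, errShort
	}
	width := bits.LeadingZeros8(b[0]) + 1
	if width > 8 {
		return 0, 0, errors.New("ebml: invalid vint")
	}
	if len(b) < width {
		return 0, 0, errShort
	}
	v := uint64(b[0])
	if !keepMarker {
		v &^= uint64(0x80) >> uint(width-1) // clear the length-marker bit
	}
	for _, c := range b[1:width] {
		v = v<<8 | uint64(c)
	}
	return v, width, nil
}

// readElementHeader reads an element ID and data size, returning the
// number of header bytes consumed.
func readElementHeader(b []byte) (id, size uint64, n int, err error) {
	id, idLen, err := readVint(b, true)
	if err != nil {
		return 0, 0, 0, err
	}
	size, sizeLen, err := readVint(b[idLen:], false)
	if err != nil {
		return 0, 0, 0, err
	}
	return id, size, idLen + sizeLen, nil
}

func main() {
	// Hand-built header: Segment ID, then an 8-byte size of 3828201.
	hdr := []byte{0x18, 0x53, 0x80, 0x67, 0x01, 0x00, 0x00, 0x00, 0x00, 0x3A, 0x69, 0xE9}
	id, size, n, err := readElementHeader(hdr)
	if err != nil {
		panic(err)
	}
	fmt.Printf("id=%#x size=%d header=%d bytes\n", id, size, n) // id=0x18538067 size=3828201 header=12 bytes
}
```

Because a decoder built this way only ever needs the next few bytes, it can run over data as it streams in rather than requiring the whole file up front.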

Cues is an array of Cue Point elements, each of which contains two important values:

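- Cue Time: the timestamp of the Cue Point. It increments at a set interval and is measured in units of the Timestamp Scale defined in Segment Information. In our case, the scale is 1000000 nanoseconds, so one unit is one millisecond.

- Cue Cluster Position: an offset from the beginning of the Segment’s data (the first byte after the Segment element’s ID and size) to a Cluster, which holds the actual Opus frames we’re looking for, beginning at the corresponding Cue Time.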

The entire webm header, including the Cue section, is usually contained within the first 1kb of the file. And we can even roughly predict the header’s size given the duration of the video, which we can also retrieve alongside the formats.

A First Swing⌗

At this point, we have all the information we need to get an initial implementation working. The rough plan is:

- Extract the formats and video duration using the library mentioned above

- Use the duration to request ~1kb from the beginning of the file

- Parse the initial header and iterate through the Cue Points until we find the Cue Cluster Positions that correspond to both our start and end timestamps

- Use the googlevideo.com/videoplayback API to make a range request from our starting Cue Cluster Position to our ending Cue Cluster Position

- Stream the content while decoding the webm to extract the Opus frames to send off to Discord, or to whatever else is consuming the frames

Decoding MKVs in Go turned out to be more of a pain than initially expected. There are many libraries available; however, none of them had everything required. Most simply couldn’t handle streaming data as it arrived and required the full file to be present at parse time. In the end, I had to write my own, borrowing many parts from go-mkvparse.

And it worked! First, a request is made for ~1kb of header, then a follow-up request fetches the specific range, bounded by the Cue Cluster Positions, that contains the Opus frames. This means that even if we want to start playing a song 23h5m into a 24h-long song, we instantly have data to work with, and the overhead is minimal.

But there’s still a problem. Reading the response body slowly doesn’t mean the file isn’t buffering in memory: the Linux TCP stack buffers received data until it’s read, so there’s no control over how much memory the request takes up unless you start tweaking kernel parameters. What’s more, the googlevideo.com/videoplayback API kills off requests that take too long to download the full requested range, with the cutoff depending on the video length.

A More Refined Solution⌗

Earlier in this post, I mentioned that while a YouTube video plays, you’d notice “many requests in the Networking Tab fetching data from googlevideo.com/videoplayback with an incrementing range query parameter.”

I was initially hesitant to use this approach, since I was fairly certain it would get rate limited if many videos were playing in parallel. After further testing, however, that doesn’t seem to be the case, and since no rate-limiting headers are returned, I’m much more comfortable relying on it.

I started by setting the chunk size to 1MB. Each chunk is parsed and extracted into Opus frames, which are submitted to a transmit queue that holds 5 seconds’ worth of frames. When the next frame would need more data than is left in the current chunk, another chunk is requested. And this works great, assuming, of course, that the next chunk can be read before the 5-second queue runs out.

Yeah, turns out this is going to be a problem…⌗

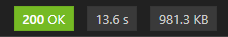

After a few days in production with no issues, videos started popping up that would randomly stop partway through playback with no reported errors. Luckily, this was repeatable: the videos in question would consistently quit after about 50 seconds of playtime. After a bit of debugging, I captured the screenshot above. A 1MB chunk of audio data was taking over 14 seconds to be fully read from googlevideo.com/videoplayback.

This probably wouldn’t be an issue if the next chunk were requested immediately after the first, considering each holds ~50 seconds’ worth of Opus frames. But the queue only holds 5 seconds to keep overhead low, and the next chunk is only requested once the current one has been fully processed and submitted to the queue. That can trigger a request taking more than 14 seconds with only 5 seconds of runway left in the queue.

The solution is pretty simple: lengthen the queue and shrink the chunks. Right now it sits at a happy medium of a 10s frame buffer and 100kb chunks. Keeping the chunks smaller means data is requested more frequently, and each request takes less time to be served.

And this is where the implementation sits today. All Get Audio From YouTube Video nodes use this underlying implementation, and you can try it out yourself by importing this blueprint into your server!